Compact AI Acceleration: Geniatech’s M.2 Module for Scalable Deep Learning


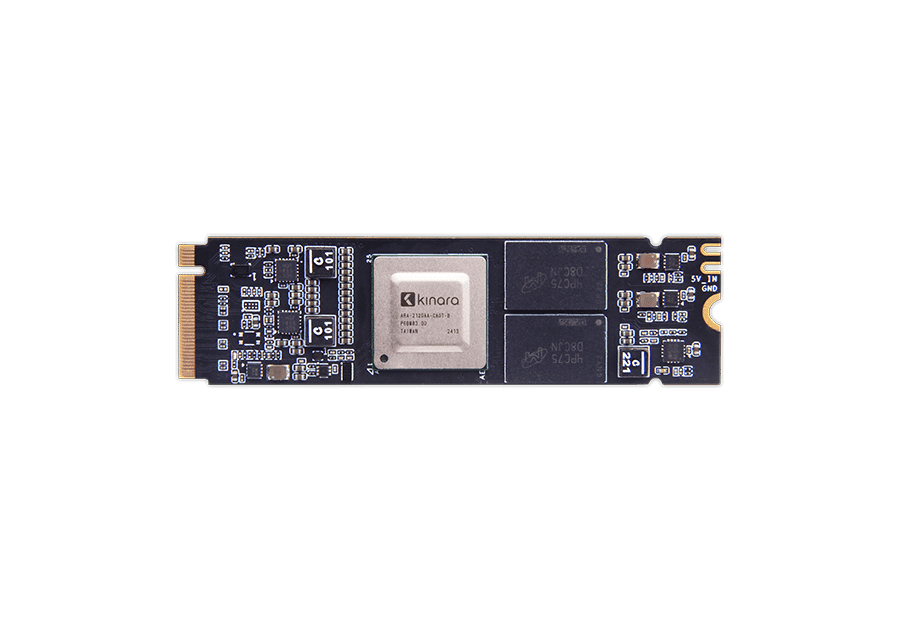

Seamless AI Integration with Geniatech’s Low-Power M.2 Accelerator Module

Artificial intelligence (AI) continues to revolutionize how industries operate, especially at the edge, where fast processing and real-time insights aren't just desirable but essential. The AI M.2 module has emerged as a compact yet powerful option for meeting the demands of edge AI applications. Delivering strong performance in a small footprint, this module is driving innovation in everything from smart cities to industrial automation.

The Importance of Real-Time Processing at the Edge

Edge AI bridges the gap between people, devices, and the cloud by enabling real-time data processing where it's most needed. Whether powering autonomous vehicles, smart security cameras, or IoT devices, decision-making at the edge must happen within milliseconds. Traditional computing approaches have struggled to keep up with these demands.

Enter the M.2 AI Accelerator Module. By integrating high-performance machine learning capabilities into a compact form factor, this technology is reshaping what real-time processing looks like. It delivers the speed and efficiency organizations need without relying solely on cloud infrastructure, which can introduce latency and raise costs.
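
The latency argument can be made concrete with a simple budget check. The sketch below is only an illustration: the function names and every number in it (inference times, network round trip, and a 33.3 ms per-frame budget for roughly 30 frames per second) are hypothetical assumptions, not measurements of any Geniatech product.

```python
def end_to_end_latency_ms(inference_ms: float, network_round_trip_ms: float = 0.0) -> float:
    """Time from capturing an input to having a result, in milliseconds."""
    return inference_ms + network_round_trip_ms


def meets_budget(latency_ms: float, budget_ms: float) -> bool:
    """True if the result arrives within the per-input time budget."""
    return latency_ms <= budget_ms


# Hypothetical figures for illustration only (not measured values).
FRAME_BUDGET_MS = 33.3  # roughly one frame at 30 frames per second

# Cloud path: fast remote inference, but each input pays a network round trip.
cloud_ms = end_to_end_latency_ms(inference_ms=5.0, network_round_trip_ms=60.0)

# Edge path: slower local inference on an accelerator, no network hop.
edge_ms = end_to_end_latency_ms(inference_ms=15.0)

print(f"cloud: {cloud_ms} ms, within budget: {meets_budget(cloud_ms, FRAME_BUDGET_MS)}")
print(f"edge:  {edge_ms} ms, within budget: {meets_budget(edge_ms, FRAME_BUDGET_MS)}")
```

Under these assumed numbers the local path fits the frame budget even though its raw inference is slower, because the network round trip dominates the cloud path. Real deployments would need measured values for both terms.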

What Makes the M.2 AI Accelerator Module Stand Out?

• Compact Design

One of the standout features of this AI accelerator module is its compact M.2 form factor. It fits easily into a variety of embedded systems, servers, or edge devices without the need for extensive hardware modifications. That makes deployment easier and far more space-efficient than larger alternatives.

• High Throughput for Machine Learning Tasks

Built with advanced neural network processing capabilities, the module delivers high throughput for tasks like image recognition, video analysis, and speech processing. The architecture is designed to handle complex ML models in real time.

• Power Efficient

Power consumption is a major concern for edge devices, especially those that operate in remote or power-sensitive environments. The module is optimized for performance-per-watt while sustaining consistent and reliable workloads, which makes it suitable for battery-operated or low-power systems.

• Versatile Applications

From healthcare and logistics to smart retail and manufacturing automation, the M.2 AI Accelerator Module is redefining possibilities across industries. For example, it powers advanced video analytics for smart surveillance or supports predictive maintenance by analyzing sensor data in industrial settings.

Why Edge AI is Gaining Momentum

The rise of edge AI is supported by growing data volumes and an increasing number of connected devices. According to recent industry figures, there are over 14 billion IoT devices operating globally, a number expected to surpass 25 billion by 2030. With this shift, traditional cloud-dependent AI architectures face bottlenecks such as increased latency and privacy concerns.

Edge AI addresses these challenges by processing data locally, delivering near-instantaneous insights while safeguarding user privacy. The M.2 AI Accelerator Module aligns well with this trend, enabling businesses to harness the full potential of edge intelligence without compromising on operational efficiency.

Key Statistics Showing Its Impact

To understand the impact of such technologies, consider these highlights from recent industry reports:

• Growth in the Edge AI Market: The global edge AI hardware market is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2028. Products like the M.2 AI Accelerator Module are key to driving that growth.

• Performance Benchmarks: Labs testing AI accelerator modules in real-world scenarios have shown up to a 40% improvement in real-time inferencing workloads compared to mainstream edge processors.

• Adoption Across Industries: Around 50% of enterprises deploying IoT devices are expected to incorporate edge AI applications by 2025 to improve operational efficiency.
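
For readers less familiar with CAGR, the short sketch below shows how a compound annual rate plays out over several years. It is an illustration under stated assumptions: the starting index of 100 and the three-year horizon are hypothetical, and 20% is used as the lower bound quoted above rather than an actual forecast figure. The helper names are likewise made up for this example.

```python
def project(value: float, annual_rate: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return value * (1 + annual_rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual rate that would grow `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Hypothetical market index of 100 growing at the 20% lower bound for 3 years.
after_three_years = project(100.0, 0.20, 3)
print(f"index after 3 years: {after_three_years:.1f}")

# Recover the annual rate from the start and end values.
rate = implied_cagr(100.0, after_three_years, 3)
print(f"implied CAGR: {rate:.1%}")
```

Because growth compounds, three years at 20% yields more than a 60% increase: each year's gain is applied to the previous year's larger base.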

With such figures underscoring its relevance, the M.2 AI Accelerator Module appears to be not just a tool but a game-changer in the shift to smarter, faster, and more scalable edge AI solutions.

Groundbreaking AI at the Edge

The M.2 AI Accelerator Module represents more than just another piece of hardware; it's an enabler of next-gen innovation. Companies adopting this technology can stay ahead of the curve in deploying agile, real-time AI systems fully optimized for edge environments. Compact yet powerful, it's the ideal encapsulation of progress in the AI revolution.

From its ability to process machine learning models on the fly to its flexibility and power efficiency, this module is showing that edge AI is not a distant dream. It's happening today, and with tools like this, it's easier than ever to bring smarter, faster AI closer to where the action happens.